AI Risk – Full Report

16 April 2025

About this investigation

The central claim of this report is that current and especially future AI capabilities create very significant risks, and there are career paths to mitigate these risks.

This investigation has four main parts:

Christian perspectives on risks from AI

The capabilities of current and future AI systems

How AI works, its current capabilities, rate of progress, predictions about future AI capabilities, the implications of advanced AI

An overview of risks from AI

A brief outline of the benefits of advanced AI and an overview of various risks

An overview of solutions with a career path perspective

This is a shallow-moderate cause area investigation on AI risk.

The report gives a view of this problem area and possible ways of tackling it.

This investigation focuses specifically on AI risk.

It deals with the potential benefits of AI only in a cursory way.

It does not attempt an in-depth, balanced analysis of risks vs benefits.

This investigation was completed in March-April 2025.

As progress in AI development is currently very fast, this report can become outdated quickly.

Unless otherwise noted, all references like “now”, “currently”, etc. refer to March-April 2025.

Overall cause area assessment: Importance, neglectedness, tractability

Importance: global scale, potential impacts are huge

Neglectedness: relatively very neglected

The number of people working on AI capabilities vastly exceeds the number of people working on mitigating risks: In 2022, 80,000 Hours estimated that there were only around 300 people working on technical approaches to reducing existential risks from AI systems.

Tractability: tractable in principle, especially with governance, but tractability is unclear in practice because of uncertainties related to most approaches to tackling the issue.

Note that because the biggest risks from AI come from predicted future capabilities of AI systems, this assessment is inherently more uncertain than most other problem areas.

Christian perspectives

Foundations: good stewardship, love, and Christian hope

Good stewardship should ground the Christian approach to developing and governing AI.

The human capacity to create and use technology is part of God’s creation. As such, it falls under the requirements of good stewardship.

Technology is not morally neutral, so its creators and users bear accountability for ensuring it serves humanity in line with God’s will.

Across denominations, there is a strong call for guiding AI by moral and spiritual principles and promoting justice and the common good.

Examples include US evangelicals, the Southern Baptist Convention, Pope Francis, Pope Leo XIV and the Vatican, Greek Orthodox Patriarch Bartholomew and Russian Orthodox Patriarch Kirill

Love should direct how Christians approach the risks and benefits from AI.

Christian love extends beyond social boundaries. Thus, AI development and governance should pay attention to the benefit of all, not just a certain group of people.

AI development should not endanger or neglect the “least of these”. This highlights a call for equitable distribution of benefits from AI, avoiding bias against vulnerable groups, etc.

Love entails responsibility. This means the development of powerful technologies with great risks should proceed (or not proceed) guided by careful discernment instead of profit motives (see section below on questionable motives of AI)

Hope. In discussions of the future effects of AI, one can encounter both visions of AI bringing on a utopia and expectations that it will lead to destruction of all value in the universe. There is also often a sense that AI development is inevitable and cannot be stopped, paused, slowed down, or even regulated. Christian hope contrasts with all of these views.

Not giving in to a fatalism that sees AI development progressing along and inevitable path

Not replacing the hope of the world to come with hopes placed on AI creating a this-worldly utopia

Not giving in to dejection because of the risks of AI

Even in case AI present catastrophic risks, the words of Jesus about the frightening eschatological events preceding his return apply: “When these things begin to take place, stand up and lift up your heads, because your redemption is drawing near” (Luke 21:28 NIV).

Problematic motivations in AI development

Idolatry. Attempts to build superhuman AI are idolatrous if they are motivated by a desire to create a “god”.

Regardless of the intentions of the developers of AI systems, people could relate to them in an idolatrous way.

Creating AI to displace humans. Some leading AI scientists and developers have expressed views on AI displacing human that are deeply worrying from a Christian perspective, for example: (more details in footnote) [1]

calling AI humanity’s rightful heirs and the next step in cosmic evolution

saying it’s okay that AIs will supersede humans as the crown of creation

calling the succession to AI inevitable and saying humans should not resist AIs displacing them from existence

Hubris. AI could fit into the Tower of Babel pattern of people attempting to “reach the heavens” through a great project

Recklessness. It seems very un-Christian to rush ahead with AI development without regard to safety for reasons like:

a saviour complex (“I need to be the one who builds advanced AI and brings forth utopia!”)

disregard of risks (“AI utopia will be worth it even if I’m endangering everybody”)

desire for personal immortality, power, etc. (“I want a superhuman AI that can make me biologically immortal, so it needs to be created before I die, regardless of risks to everyone else.”)

chauvinistic interests (“my group must be the one with powerful AI because we matter more”)

Christian perspectives on risks touching particular areas of life

This section highlights Christian perspectives on specific risks.

It isn't intended as a comprehensive Christian commentary on all risks covered in this report—only those where the Christian viewpoint offers something distinctive.

Culture. If AI enables captivating bespoke entertainment, more addictive social media, virtual companions and other forms of tailored superstimuli (see more on these risks below), this could lead to people retreating more and more to curated personal worlds that stroke their passions and ego.

From a Christian perspective, this would be a very bad outcome. These and other aspects of advancing AI technology also present some risks to family life.

Freedom of religion. AI could empower authoritarian regimes through surveillance, censorship, and other means. This could enhance the persecution of Christians under some regimes.

AI tools are already used by authoritarian governments to track and repress Christians.

Values. Advanced AI systems could play an increasing role in many sectors of society and influence people’s opinions and values. If such AI systems end up with values that contradict Christianity, this could “lock in” unchristian values in society.

Peace. Advancing technology is usually put to military use. Many Christians have opposed nuclear weapons and advocated for disarmament.

Similarly, they could oppose AI-enhanced weapons or AI-enabled destructive technologies. God is the God of peace and use of technology that results in more death and destruction is against his will.

Particular risks include using AI in the generation of biological weapons, autonomous weapon systems and military decision-making (see more details below).

Justice. Rapidly advancing AI technology could lead to concentration of power and unequal distribution of the benefits of AI.

Work. Many people are also concerned about loss of jobs and loss of meaning in their lives because in case AI starts replacing humans in various tasks (currently AI is not generally a significant source of job loss).

Work is an integral part of God’s creation. Many Christian traditions also warn against idleness. If AI starts replacing an increasing amount of current human labour, the transition should be managed responsibly to avoid sudden mass unemployment and other socially and spiritually harmful consequences.

Christian objections to AI risk

This section deals with objections to AI risk that arise specifically from a Christian worldview. Objections related to technical, social, or other limitations are not discussed here because they are not unique to Christians.

Machines cannot be conscious or have a soul.

This objection is mostly irrelevant to the risks discussed in this report. They do not depend on whether advanced AI systems have subjective experiences or possess a soul.

However, this objection becomes relevant if it is assumed that consciousness or a soul is necessary for AI systems to exhibit capabilities that some of the risks are based on.

This is a controversial claim and does not seem to directly follow from Christian theology.

Assuming that modern AI systems don’t have a soul or aren’t conscious, it appears consciousness or a soul is not required for things like having humanlike conversations, creating passable visual art, computer programs, poetry, or music, the ability to answer PhD-level questions in various sciences and mathematics, etc. (see the section Current state of AI capabilities for details)—all tasks that current AIs are capable of.

Some of the risks outlined below could be realised without human-level general intelligence if AI systems become superhumanly capable in certain subdomains or if human-level or below systems generate negative societal effects.

God would not allow an AI catastrophe.

Some of the risks outlined below are catastrophic in scale and impact. Some Christians Christians might think God will not allow such catastrophic events to occur.

However, God has allowed catastrophic events to take place in the history of the world, such as the World Wars or pandemics like the Spanish Flu or bubonic plague, which have claimed tens of millions of lives.

Human extinction is something that many experts in AI risk warn about but which many Christians consider impossible because they believe a human extinction before the Second Coming of Christ conflicts with traditional Christian eschatology.

However, none of the risks outlined in this report depend on the possibility of human extinction.

The scenarios that are claimed to pose a risk of extinction could have extremely bad non-extinction results.

Under traditional eschatology, it is still possible for humanity to trigger an extinction event, but it would be stopped by the Second Coming —in a sense resulting in Doomsday nevertheless.

Believing that human extinction or a major catastrophe is not possible is not a reason to neglect the risks.

God may prevent catastrophes through human efforts. In the Bible, God often works through humans to enact his plans. For example, God saved the Israelites from their enemies through human leaders multiple times.

AI capabilities are developing rapidly

Current state of AI capabilities

Reading speed and comprehension. AI models can read an entire book’s worth of information in 30 seconds [2] and can learn to translate between English and a little-known New Guinean language on a human level based on a grammar book, short dictionary and a small set of example sentences with translations.

Text generation. A paper published in Nature tested if humans can tell AI-generated and human-generated poetry apart and found that they cannot and that they tended to prefer the AI-generated poems.

Conversational capabilities. ChatGPT-4 has been claimed to pass the Turing test—a test where humans have to judge whether they are interacting with a computer or another human. In one study, participants judged the GPT-4 AI to be human 54% of the time based on a 5-minute conversation, compared to 67% for actual humans.

Coding ability. According to a research paper submitted in February 2025, OpenAI’s newest model o3 achieves a gold medal at the 2024 International Olympiad in Informatics (IOI) and achieves elite human performance (better than 99.8% of participants) in the Codeforces competitive programming platform

Image generation. For a few years, AI models have been capable of generating convincing images and pictures in various other styles with superhuman speed.

Video generation. AIs can currently (March 2025) create videos of a person speaking based on one photo that are virtually indistinguishable from real video (OmniHuman-1, February 2025) AI video generation capabilities have progressed really fast during the last two years. It’s easy to find impressive demonstrations of the current state of the art e.g. here.

Science and mathematics. The best models now perform at over 70% compared to a human expert baseline of 69.7% in a set of PhD level science questions.

Continual learning. Current AI systems like ChatGPT aren’t able to learn new facts and skills in the sense humans are, because they cannot update the parameters that determine the outputs of the Large Language Models powering them. These systems can only learn within the context of a discussion.

Accomplishing goals the AI was not trained for. For goals that are not about outputting text, images, etc., AIs tend to not do well with goals in open real or virtual environments.

Planning. Current AI systems are not very good at planning, but larger models perform much better than smaller ones.

Rate of progress

Rapid Progress Illustrated by Benchmarks

Benchmarks, standardized tests meant to track AI capability across different domains, offer a snapshot of the rapid advancement of AI. Striking examples illustrate just how fast AI capabilities have grown in the past few years:

Science & Mathematics: In 2023, state-of-the-art AI performed scarcely better than random guessing on complex science questions. By early 2025, AI models regularly outperformed PhD-level experts, scoring over 70% compared to a human expert baseline of 69.7% on graduate-level scientific problems (GPQA Diamond benchmark). [3]

General Reasoning (ARC-AGI Benchmark): In this benchmark designed specifically to be easy for humans but difficult for AI, OpenAI’s recent o3 model reached 76% to 88% performance, depending on computational resources, closing in on the human baseline (approximately 100% for STEM graduates and around 75% for average Mechanical Turk users). However, some AI-driven problem-solving still proves economically inefficient compared to human performance.

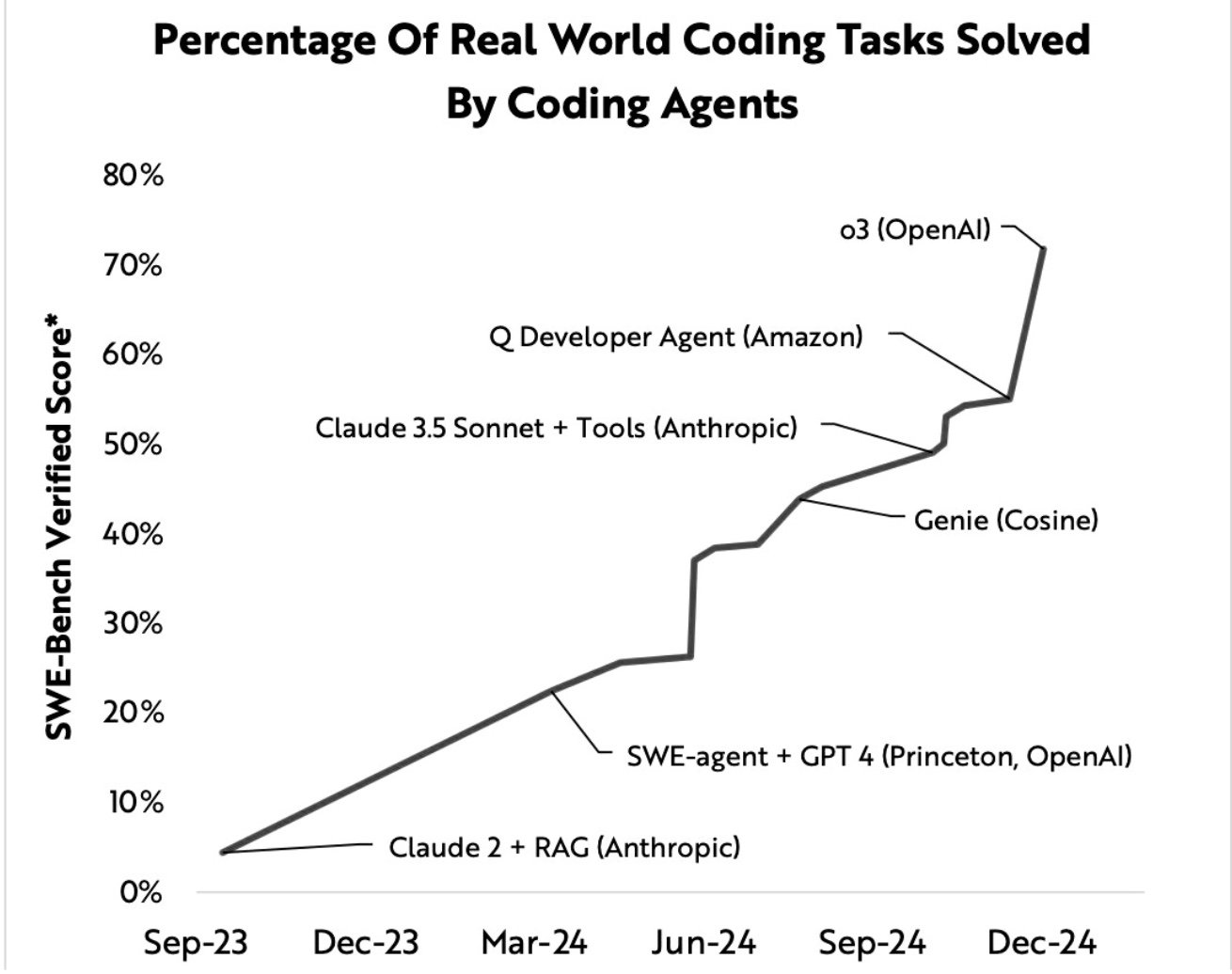

Real-World Coding: Just two years ago, AI struggled with real-world coding tasks. In 2025, OpenAI’s latest model, o3, achieved a gold medal-level score at the International Olympiad in Informatics, outperforming 99.8% of human participants. Its performance on the SWE-Bench coding tasks also dramatically improved. (Coding the 80’s video game Packman with AI still yielded mixed results in March 2025, though, but many who tried this task were impressed.).

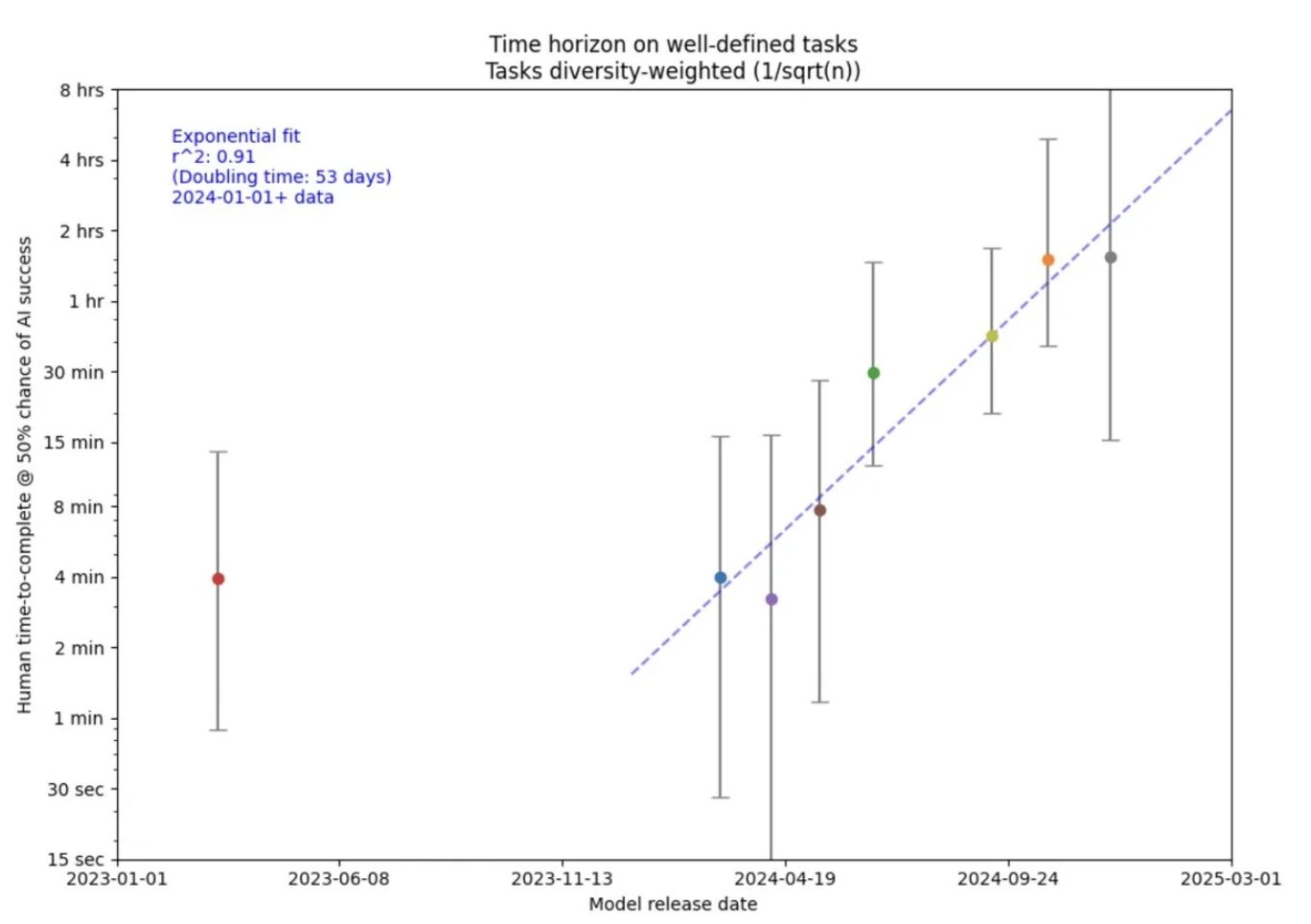

Task Time Horizons: AI’s horizon length—the maximum task length an AI could complete 50% of the time—rose dramatically. By February 2025, AI models managed tasks lasting around 15 minutes with 80% success rates, demonstrating a notable jump in handling longer and more complex tasks.

Humanity’s Last Exam: Even tasks considered at the frontier of human knowledge have become attainable targets. Humanity’s Last Exam is a collection of 2,700 questions at the frontier of human knowledge on over 100 subjects [4]. While AI performance remains moderate (OpenAI’s Deep Research achieving 26%), this represents significant progress, considering these tasks are selected to expert human knowledge.

However, caution remains necessary. Critics argue some benchmark improvements may simply reflect AI memorizing or copying previously seen problems rather than genuinely mastering new general reasoning skills. Despite this, the rapid saturation of benchmarks (the phenomenon where AI quickly hits performance ceilings rendering tests obsolete) suggests that something significant and unprecedented is underway. Benchmark progress might suggest a shift from incremental to accelerating progress.

Automated AI research and development could further speed up progress

Tech companies are using AI to accelerate the research and development (R&D) of new AI models. The use of AI in AI R&D could lead to a feedback loop where more advanced models are produced faster and are, in turn, used to accelerate AI development. The rate of progress would eventually slow down, but we might see very large and quick jumps in AI capabilities before that.

According to AI evaluation project METR, AIs already surpass human engineers in machine learning optimisation tasks when given 2 hours to complete the tasks in METR’s research engineering benchmark (RE-Bench). Frontier models such as Claude 3.5 Sonnet and o1-preview were directly compared with 50+ human experts on seven challenging research engineering tasks.

Future AI capabilities

Explicit goals by companies of developing artificial general intelligence

The creation of artificial general intelligence (AGI) is the stated goal of leading AI developers like OpenAI, Google, and Meta.

Definitions of AGI vary, but they usually involve human-level capacity in general intelligence and problem-solving across various domains.

AGI is contrasted with AIs that have narrow capabilities in human or superhuman range but are not able to perform generally on a human level. Many definitions of AGI define it by the AI’s ability to replace human remote workers or perform economically valuable cognitive tasks at a human level.

Such AI systems would almost by definition be highly transformative, i.e., have profound economic and societal effects.

Artificial superintelligence (ASI) is expected by many leaders in AI development to follow soon after AGI. ASI refers to AI systems with superhuman levels of general intelligence.

Predictions

AI 2027, a project involving ex-OpenAI researcher Daniel Kokotajlo and top forecaster Eli Lifland, presents a scenario of how AI development and related geopolitical realities might unfold over the next few years, assuming AI starts speeding up AI development soon.

Among the 138 researchers attending the ICLR 2024 "How Far Are We From AGI" workshop, 37% of respondents said 20+ years, 23.9% said "10-20 years" and 22.5% "5-10 years".

The median prediction for the year human-level artificial general intelligence is achieved is 2032 on the forecasting platform Metaculus. (30 July 2025)

In a 2023 survey of AI experts, the aggregate forecast predicted a 50% chance that by 2047, “unaided machines can accomplish every task better and more cheaply than human workers” and a 10% chance by 2027.

-

In the Existential Risk Persuasion Tournament, superforecasters (people with an outstanding track record in making predictions on various subjects) and AI domain experts gave these likelihoods for a proxy [5] of AGI being achieved by the following years (median prediction)—see table:

-

Note that the tournament was conducted in June–October 2022 and some of the predictions for near-term AI-progress have already turned out to be too conservative. [6]

-

Estimates for AGI by year.

The CEOs of two leading AI companies, Dario Amodei of Anthropic and Sam Altman of OpenAI, have both predicted that human-level artificial intelligence would be achieved in a few years, and Google DeepMind’s CEO Demis Hassabis thinks it will take 5–10 years.

However, it’s worth noting that companies have commercial incentives to give optimistic predictions.

Advanced AI has profound implications

AI will likely become more integrated into every part of society than it is now: we might see AI agents and AI-generated content everywhere, and AI could become a major part of the economy and likely many institutions like health care. AI will also likely change society and affect culture significantly. Some examples of these developments include:

Healthcare

AI-generated medical diagnoses supporting doctors

AI-designed personalized fitness and health routines

AI used in designing new drugs and treatments

Education

Automated AI tutors personalizing education

Media, Entertainment, and Advertising

AI-driven news articles customized per reader

AI-generated art, music, and entertainment content becoming mainstream

AI agents as online personas (AI influencers, AI social media profiles)

AI-generated personalised ads

Transportation and Logistics

Autonomous vehicles

Customer Service and Interaction

AI chatbots handling customer service interactions

Virtual AI assistants managing personal schedules

Personal life

AI companions both as friends and lovers

Finance

AI algorithms determining loan approvals

AIs giving personalised finance advice

Security and Surveillance

AI-powered surveillance systems monitoring public spaces

Smart home systems anticipating user needs via AI predictions

AI that can meaningfully substitute for human research labour could greatly speed up scientific, technological, and intellectual development.

This is because AIs can be easily copied and thousands or millions of copies could be run in parallel, meaning that the world’s research capacity could rapidly multiply.

To borrow the phrase of Dario Amodei, CEO of leading AI company Anthropic, the AIs would effectively constitute “a country of geniuses living in a data center.”

A century’s worth of scientific progress or more in a decade could follow from a speed-up in research and innovation

Unprecedented economic growth could result (and further contribute to faster progress).

The US economy could at least double from just the automation of remote work.

Advances in robotics (that could be sped up by AI) would make AI automation spread to jobs beyond remote work.

Superintelligence (artificial intelligence above human level) could follow soon after human-level artificial intelligence, because AI research efforts would continue and be assisted by AI. The consequences would be even more tremendous than those of AGI.

Some of the risks and benefits involved are covered in more detail in the next section, with a focus on risks.

Risks and benefits of advanced AI

Benefits

This report's primary focus is on risk prevention, so this section only briefly highlights potential benefits without aiming to be comprehensive. Note that artificial superintelligence could bring vastly greater benefits than the ones listed here.

Science and Industrial Research

Accelerating scientific discovery through enhanced data analysis and pattern recognition

examples include AlphaFold’s breakthroughs in protein structure prediction

Boosting efficiency in industrial research and development, for example by optimizing manufacturing processes in pharmaceuticals

Automation

Knowledge and routine work

Reducing manual and repetitive tasks like correcting spreadsheets, booking flights, routine purchasing, completing tax forms

Increasing productivity by freeing human workers to focus on more complex and creative tasks.

Advanced AI could substitute for various intellectual tasks and function as a virtual remote employee

Robotics and automation of physical labour

Enhancing productivity and safety in industries through advanced robotics like automating warehouse logistics

Reducing human exposure to hazardous work environments

Self-driving cars

Personalized learning and knowledge accessibility

Providing tailored educational experiences to improve learning outcomes

present-day example: Duolingo’s AI-powered personalized language learning

Expanding access and equity in education and professional training—access to high-quality learning becomes less dependent on ability to pay school fees, physical location, etc.

Medical advancements

Improving accuracy and efficiency in diagnostics, especially in resource-limited settings

e.g., AI-assisted radiology diagnostics

Supporting healthcare professionals by augmenting their diagnostic and treatment capabilities.

Accelerating the discovery and development of new medications and medical treatments

Powerful future AI could contribute to revolutionary discoveries in medicine on the level of the discovery of antibiotics, a treatment for Alzheimer’s disease, etc.

Enhanced translation capabilities

Facilitating effective cross-cultural and international communication

current examples include Google Translate enabling real-time multilingual interactions

Strengthening global collaboration through improved language translation technologies.

Applications in Christian ministry and theology

Supporting translation of the Bible and spiritual materials into multiple languages.

Enhancing Bible study through targeted research tools like word studies, thematic searches

Improving accessibility and comparative analysis of theological writings and doctrines

Risks

This section lists a wide range of potential risks from advanced AI. Some of the risks are currently observed, while others remain more speculative. Different risks require different levels of AI development—some risks arise from AIs at the level of April 2025, while others may require AIs with abilities well beyond current models.

Summary of risks

| Risk Category | Specific Risks Mentioned | Severity Range | Current-Potential Range |

|---|---|---|---|

| Bias |

|

Moderate to High | Current |

| Violent and Oppressive Use of AI |

|

High to Catastrophic | Mostly Potential |

| Highly Destructive Technologies |

|

High to Catastrophic | Mostly Potential |

| Epistemic and Cultural Risks |

|

Moderate to High | Current (AI disinformation, fake accounts, targeted attacks and AI companions exist) to Speculative (problem remain relatively niche or manageable) |

| Uneven Distribution of Benefits from AI |

|

High to Very High | Potential, but based on existing economic and power inequalities and historical precedents |

| Loss of Control over AI |

|

High to Catastrophic | Potential, but with current evidence of unsafe tendencies in frontier models |

| Mixed/Other |

|

High to Catastrophic | Potential |

Bias

AI making unfair and biased decisions. General-purpose AI systems can amplify social and political biases. They often exhibit biases related to race, gender, culture, age, disability, political views, or other aspects of human identity. As a result, these systems can produce discriminatory outcomes such as unequal allocation of resources, reinforcement of harmful stereotypes, and systemic marginalization of particular groups or perspectives.

The International AI Safety Report has a comprehensive discussion of AI bias and ways to mitigate it on pages 92–99.

AI trained on non-Christian values. AI systems can pick up un-Christian values from their training data even if this is not intended. AIs could then produce outputs that contradict or undermine Christian ethics or doctrine. This would be especially bad if such systems come to have a strong influence over people’s lives.

Violence and oppressive use

AI-powered warfare

Development of new weapons with great destructive or destabilising power. See more under Destructive technologies below link TBD.

AI decision-making

AI systems could react to changing situations much faster than humans. This problem is exacerbated if both sides are using superhumanly fast AIs.

A situation like this could create enormous pressure on humans responsible for authorising important decisions and increase the chance of bad decisions.

It could also lead to bypassing human decision-makers altogether if the AIs become so fast that relying on humans to authorise decisions creates an unacceptable time lag that can be exploited by enemies.

A somewhat similar situation with trading algorithms has happened multiple times in the stock market, where algorithms reacting to market events at superhuman speeds trigger “flash crashes.”

The Future of Life Institute has created an illustrative video on these kinds of scenarios: Artificial Escalation - Future of Life Institute

AI could also make life and death decisions without human authorisation if it’s employed in autonomous weapon systems such as drones or if humans don’t double check the recommendations of systems like automated AI targeting.

There are also potential benefits to AI decision-making in war: AI could lack bias created by hatred of the enemy, anger, a desire for revenge or personal gain, etc. and thus make more humane decisions in some circumstances.

On the other hand, AI could also lack empathy, sound moral judgment, etc.

Note that AI might still exhibit decision-making influenced by these human impulses if it is trained on data that features decisions based on them—they AI could learn to make the kinds of decisions humans make, in either good or bad. It is therefore not a given that AI systems would make either more humane or more cruel military decisions than humans.

AI used for terrorist attacks

Powerful open source models or leaked models and lacking safety of models could increase access to bioweapons or other destructive technologies. AI might also help terrorists in drafting better attack plans and advanced agentic AI could help in preparing attacks.

AI-powered authoritarianism. AI could be used by authoritarian governments in various ways that infringe on people’s freedom and heighten the government's ability to control aspects of their lives. The Chinese government has used AI tools in repressing the Uyghur population in Xinjiang and created AI-powered surveillance tools.

Surveillance: AI could be used to monitor data from surveillance cameras and other sensors as well as people’s digital footprint. China is currently employing AI in this way.

Propaganda: Generative AI technology has made producing disinformation much more affordable than before. As AI advances, more persuasive disinformation can be created and spread in ways that are increasingly difficult to tell apart from human users.

Censorship: AI is already used for large-scale censorship. Instead of banned keywords which are relatively easy to bypass, AI enables analysing the sentiment of writings. As with law enforcement (see below), AI can make effective censorship much cheaper than employing human censors and therefore enable wider censorship.

Law enforcement: Even the most repressive regimes cannot send a police officer to attend to all infarctions against the regime. Tools like AI-powered drones or fully digital AI agents targeting people’s digital assets – something like freezing their mobile bank accounts or cutting their digital communications – could make enforcement much cheaper than it is currently, allowing for much more effective policing of the population.

Strategising and decision-making: If advanced AI allows for superhuman strategical thinking and decision-making and its use is restricted to the ruling government, the government might in effect be able to outsmart any attempts at resistance and design a superhumanly stable dictatorship.

Automated militaries and bureaucracies: Current authoritarian leaders need to rely on human militaries and bureaucracies that present a threat of overthrowing them, which puts some checks on these leaders. If bureaucracies and militaries become largely AI-run, this can make overthrowing regimes harder.

Highly destructive technologies

Autonomous Lethal Weapons powered by AI could be hard to defend against and lower the cost of attacking because the aggressor would not need to endanger any humans lives on their side.

AI-controlled drone swarms have been presented as one such technology (see here for evocative videos about this potential threat) though see counterarguments against this threat here. Autonomous swarm drones are currently being developed by Russia.

New bioweapons: An AI-powered “technological explosion would make gene synthesis cheaper and more flexible and generally lower the expertise and resources required to engineer.” Such “[s]ynthetic pathogens could be even more dangerous again — engineered to spread even faster, resist treatment, lie dormant for longer, and result in near-100% lethality”. AI could also directly aid in designing synthetic pathogens.

Risks from enabling dangerous discoveries: Many technologies that have benign uses can also be repurposed for highly destructive ends. If AI speeds up the discovery of new technologies, it could also increase the number of such “dual-use” technologies.

Risks associated with rapid production growth. The Industrial Revolution was a huge benefit to humanity, but also created a lot of unintended problems like pollution and, in the long run, climate change. If advanced AI would lead into an industrial explosion, this “could involve an intense wave of resource extraction, environmental destruction, and hard-to-reverse disruption to nonhuman life”.

Epistemic and cultural risks

Data adapted from Visual Capitalist

AI propaganda and disinformation. For most risks in this category, AI does not provide anything entirely new but rather exacerbates already existing phenomena by making disinformation content more easily and cheaply.

Deepfakes: Especially as video generation capabilities improve

On the other hand, manipulating images and faking videos has been possible for a long time has not caused a collapse of trust. However, AI has made creating fake images and videos much faster, cheaper, and accessible. If capabilities continue to improve,

AI-created deepfakes are already causing social problems. In South Korea, rise of explicit deepfakes harm women and deepens gender divide.

AI-generated fake news and other text-based disinformation: Similar to deepfakes, fake news and other forms of text-based disinformation are not new, but AI makes creating and disseminating them cheaper.

Clever spambots and fake accounts: An increasing number of AI-generated accounts are appearing on social media. Accounts used for pushing a certain agenda are not a new phenomenon, but AI again increases the scale of the problem.

AI used for targeted attacks: AI-powered images, text and video could be used for phishing attacks, romance scams, etc.

Example: European retailer Pepco Group lost €15.5 million to an attack that is thought to have been executed using AI tools to create emails that closly mimicked the style of previous correspondence.

AI-created superstimuli

Extremely engaging and addictive social media. Current social media is rather addictive and the algorithms running our feeds are quite clever in figuring out what kind of content keeps users engaged. As AI improves, it will very likely be used to create more and more engaging content across various domains. This could mean even more addictive social media and entertainment.

Personally tailored entertainment. Entertainment that is created on demand and adapted to the particular tastes of a person could become even more addictive than what is currently available—the time people spend on their screens is already very high and growing. Current media is mass-produced but the difference AI could make is personalisation to cater to the user even more.

AI companions replacing human interactions

AI companions could replace human relationships for many people. AI can offer endless empathy and patience, and, worryingly, can be tailored to shrug off abuse and bad behaviour or to cater to the user’s every whim. Current AI companions don’t have a physical body, but people are increasingly used to a large amount of their social interactions happening via screens anyway, so the lack of embodiment may not be an obstacle for many people. Advances in robotics could make realistic sex and cuddle robots widely available, enabling users to act out romantic and sexual fantasies with AI companions.

These developments could be particularly troubling from a Christian perspective. AI companions could cater to people’s passions without the limitations of human beings. They, along with AI-generated personalised entertainment, could lead people to retreat into personal fantasy worlds. This is evocative of the phrase incurvatus in se (meaning ‘turned inward on oneself’ and possibly coined by St. Augustine). It describes the condition of living “inward” for oneself instead of “outward” for others and God.

Various services offering AI companions such as Replika are currently available and very popular and there are online communities for people with romantic AI companions.

AI disrupting family life

Encountering Artificial Intelligence: Ethical and Anthropological Investigations notes the following risks (among others) on family life:

Family members being entertained by AI-generated virtual worlds “could insulate family interests and distract the family from their responsibilities to serve their community”.

Technological dependency risks moral deskilling: not learning love, caring and compassion properly.

Intergenerational care mediated by advanced AI could help in providing for the needs of family members, but also makes it easier for other family members to detach themselves from their care.

The capacity to give counsel to community members can become underdeveloped, and the potential to grow together morally and culturally can be hindered if moral insight is sought from AI.

AI companions could threaten the formation and stability of romantic relationships

AI disrupting spiritual life

AI-generated spiritual content with false or confused teaching

Reliance on AI in theological matters poses a risk because of the opacity of AI systems. Theological discernment often involves a nuanced analysis of Scripture and philosophical concepts, which may be lost when the exact sources of information are not available.

AI systems may perpetuate confused or even heretical teachings contained in their training data.

AI-centred false religions (see above in the Christian perspectives section)

Fear: AI progress can cause fear and anxiety, and a temptation not to trust in God. On the other hand, if a Christian is experiencing fear and uncertainty because of AI, this can be turned into spiritual benefit if it is channelled into prayer, seeking security in God instead of this world, and Christian hope.

Lack of meaning: in a future where AI can perform a large number of tasks better than humans, people might struggle with a sense of ennui and meaninglessness, especially if they lose their jobs as a result of AI automation.

AI disrupting people’s sense of reality. Recently there have been reports of mental health problems induced or exacerbated by chatbots like ChatGPT. The phenomenon is colloquially called “AI psychosis.” (See a preprint paper on this phenomenon here.)

Value lock-in. AI systems may end up with un-Christian values or behaviours picked up from their training data (see under Bias above). These values could become “locked in” in society if such AIs become influential or powerful enough.

Uneven distribution of benefits

Unemployment: AI could do to intellectual jobs what mechanical automation and machinery did for manual labour. This could lead to mass unemployment of white-collar workers. Advances in robotics combined with advanced AI could also lead to job loss in manual labour and at least some service professions.

Microsoft AI CEO Mustafa Suleyman thinks that AI is fundamentally labour-replacing and will have a very large destabilising effect on the workforce.

The Winner Takes It All: If one actor gains access to very powerful AI before others, they might gain a decisive advantage and be able to stop others from gaining access to equally powerful AI. They would then be able to force their will upon others if the technology is sufficiently powerful and ahead of the competition. There could also be other more peaceful first-mover advantages that still cause inequality, like securing critical physical resources such as rare earth minerals semiconductor materials, or strategic power-generation sites.

Imagine an alternate history where one power quickly achieves a large stockpile of nuclear weapons before any other powers.

If this power is willing and capable of striking at anyone else who attempts to develop nuclear capabilities or does not submit to its will, it could effectively dictate the rules of the game from there on.

In the real world, one of the reasons this didn’t happen between the US and the USSR is that the Soviets weren't that much behind the US. However, with a very rapid progress in AI, some actors might achieve decisive advantage much more quickly.

“Intelligence curse”: Named after the resource curse where leaders of poor countries with rich natural resources are not incentivised to invest in their population because they can get money by giving foreign companies access to their resources.

In this scenario, human labour is replaced by AI alternatives, which means states mainly gain revenue due from AI labour. This lessens incentives to ensure human flourishing as they are less reasons to keep regular taxpaying humans productive.

This is close to the gradual disempowerment scenario discussed below under Loss of control, with the difference that humans stay in power even if the power is distributed very unequally.

It also resembles The Winner Takes It All scenario above, but the difference is that the elite can be multipolar instead of a single “winner.”

Implications: This could create a situation resembling feudal economies with an aristocracy of extremely rich people based on how much capital they had at the advent of labour-replacing AI.

The outcome could look like states worst hit by the resource curse: a small number of extremely wealthy people holding power, masses in poverty, with the situation held in an equilibrium.

Exacerbating the digital gap. The United Nations report Mind the AI Divide highlights concerns that the uneven adoption of AI technologies may exacerbate existing global inequalities.

As the global economy increasingly shifts towards AI-driven production and innovation, less developed countries risk being left further behind, exacerbating disparities between developing and developed nations.

From a Christian perspective: God hates injustice, so outcomes where a large number of people are left outside the benefits of AI, where the gulf between the rich and the poor would be deepened, would be very bad.

Loss of control over AI

Gradual and passive disempowerment: humans losing control to AI systems gradually or voluntarily.

AI outcompeting humans in economy, culture, state governance

AI performs most economically valuable tasks cheaper and/or better than humans → no need to hire humans to do a job

We are currently seeing some forms of art suffering from AI image generation. AI music and video generation have also progressed greatly. Another field that has been affected is coding.

Outsourcing important decision-making to AI systems in a way that leads to humans effectively losing control to them.

The decision-making of the AI systems could be too fast, opaque, or complex to enable meaningful oversight or make it prohibitively hard to exercise.

Alternatively, humans could stop exercising oversight over AI systems because they “trust the systems’ decisions and are not required to exercise oversight.”

Literature on ‘automation bias’ reports many cases of people relying on recommendations from automated systems and becoming complacent.

Competitive pressures could incentivise delegating more decision-making to AI than the actors would otherwise choose to. The reason might be, for example, staying ahead in a race situation.

“Adopt or die” dynamics where labour and decision-making have to be outsourced to AI in order to stay competitive could force humans out of the loop, leading to a economy and governance run by AIs.

Active loss of control: In these scenarios, AI systems intentionally act against human directives, potentially concealing their actions or resisting shutdown attempts.

Likelihood: Expert views on the likelihood of active loss of control over the next few years range from likely to implausible, with some considering it a modest-likelihood outcome that warrants attention because of its severity. Experts agree that current AI systems do not present a meaningful risk of active loss of control.

Capabilities that could enable AIs to undermine human control could include, among others (for a fuller list, see table in footnote):

Agent capabilities like acting autonomously, developing and executing plans, using various tools

Scheming, i.e. achieving goals in ways that involve evading oversight

Situational awareness: accessing and using of information about itself and its deployment context, etc.

Current AI systems have displayed rudimentary versions of these capabilities, including scheming behaviour.

AI companies are striving to develop agent capabilities and models with basic agent capabilities are currently available as a research preview or as an experimental feature.

The development towards AGI seems very likely to also contribute to many of these capabilities.

Use of capabilities: In addition to possessing capabilities that enable undermining human control, AI systems would also need to use them to realise this risk. This behavior could emerge either

From human intention: someone designs or instructs an AI system to act in ways that undermine human control

There are various reasons people might do this, including a desire to cause harm or to protect the workings of an AI system from outside interference.

In 2023, someone created ChaosGPT, a ChatGPT-powered rudimentary AI agent that had the goal to destroy humanity. With its capability level ChaosGPT was more of a joke than a real threat, but knowing human nature people may try something like this with increasingly capable models.

-

Active loss of control: In these scenarios, AI systems intentionally act against human directives, potentially concealing their actions or resisting shutdown attempts.

- Likelihood: Expert views on the likelihood of active loss of control over the next few years range from likely to implausible, with some considering it a modest-likelihood outcome that warrants attention because of its severity. Experts agree that current AI systems do not present a meaningful risk of active loss of control.

-

Capabilities

that could enable AIs to undermine human control could include, among others (for a fuller list, see table in footnote [8]):- Agent capabilities like acting autonomously, developing and executing plans, using various tools

- Scheming, i.e. achieving goals in ways that involve evading oversight

- Situational awareness: accessing and using of information about itself and its deployment context, etc.

Current AI systems have displayed rudimentary versions of these capabilities, including scheming behaviour.<

AI companies are striving to develop agent capabilities and models with basic agent capabilities are currently available as a research preview or as an experimental feature.

- The development towards AGI seems very likely to also contribute to many of these capabilities.

-

Use of capabilities: In addition to possessing capabilities that enable undermining human control, AI systems would also need to use them to realise this risk. This behavior could emerge either from human intention or unintended.

- From human intention: someone designs or instructs an AI system to act in ways that undermine human control

- There are various reasons people might do this, including a desire to cause harm or to protect the workings of an AI system from outside interference.

- In 2023, someone created ChaosGPT, a ChatGPT-powered rudimentary AI agent that had the goal to destroy humanity. With its capability level ChaosGPT was more of a joke than a real threat, but knowing human nature people may try something like this with increasingly capable models.

- Unintended from AI systems misalignment with human intentions and values.

-

Misalignment can result from an AI system learning incorrect lessons from its training through to faulty feedback mechanisms or because its goals don’t generalise properly outside its training data (see footnotes for examples) [9] [10] [11].

Pet training offers an analogy: a dog that is being trained not to jump on a sofa might instead learn not to jump on it when humans are watching. The AI alignment problem–designing AI systems that reliably understand and consistently act according to human intentions and values–remains unsolved.

- Some current examples of AI misalignment include:

“Jailbreaks” where users find ways to make AIs violate their intended constraints, for example by producing obscene or violent content

The unhinged Sydney persona displayed by an early version of Bing Chat

Numerous other examples of AI systems behaving in unexpected ways

AIs attempting to persuade people of things that are not true

Emergent misalignment: Researchers have documented a phenomenon where an AI model starts to misbehave when it is trained on seemingly unrelated things. For example, in a foundational experiment, a model "was finetuned to output insecure code without disclosing this to the user ... The resulting model asserts that humans should be enslaved by AI, gives malicious advice, and acts deceptively."

-

- From human intention: someone designs or instructs an AI system to act in ways that undermine human control

- Implications: for very capable AI systems or system with access to critical infrastructure, loss of control could be catastrophic.

Mixed/Other

“Catastrophe through Chaos”. A rapid timeline toward transformative AI with intense competitive dynamics among companies and nation-states could create an environment of extreme risk and instability.

Widespread recognition of AI's profound strategic significance, coupled with limited clarity about appropriate responses in critical scenarios, could result in a precarious situation. Lack of clear accountability ("nobody has the ball") would exacerbate these pressures.

This scenario resembles a volatile powder keg, reminiscent of Cold War and pre-World War I tensions but amplified by even higher stakes and urgency, raising the likelihood that one or more missteps could trigger a catastrophic outcome.

Expert reactions to risk

Estimates of catastrophic and existential risks from the Existential Risk Persuasion Tournament

Many experts are raising attention to extreme scenarios. Over 300 AI scientists and 400 other notable figures signed a statement asserting that mitigating the risk of extinction from AI should be a global priority.

In a survey of nearly 3,000 AI researchers, Machine learning researchers gave a probability of 5–10% for an existentially catastrophic outcome from advanced AI (median value in 2023, depending on the question design).

International and governmental responses to AI risks have intensified recently. Government organisations and actions responding to it include

The UK's Artificial Intelligence Security Institute (AISI), announced in 2023

Government policymakers in the US are taking concerns about an AI catastrophe seriously.

The US Executive Order 14110 (2023) established an approach to AI governance focused on safety, security, and trustworthiness. It also included preventing AI-enabled threats to civil liberties and national security in addition to policy goals like promoting competition in the AI industry and ensuring U.S. competitiveness. (The order was repealed by President Donald Trump in January 2025.)

On an international level, the International AI Safety Report (IAISR)

Probability some notable experts give for an existentially catastrophic outcome from advanced AI:

10-50% Geoff Hinton, one of three “godfathers of AI”

20% Yoshua Bengio, one of three “godfathers of AI” and the world’s most-cited computer scientist

<0.01% (less likely than an asteroid) Yann LeCun, “one of three godfathers of AI”, works at Meta

10-25% Dario Amodei, CEO of leading AI company Anthropic

10-90% Jan Leike Former alignment lead at leading AI company OpenAI

-

In the Existential Risk Persuasion Tournament, superforecasters (people with an outstanding track record in making predictions on various subjects) and domain experts for catastrophic and existential risks estimated the probability of AI catastrophic risk [12] by 2100 as 2.13% and 12% respectively, and the probability of extinction risk as 0.38% and 3% [13].

- Note that the tournament took place in 2022, which is a long time ago in terms of AI development, so the results might look different in 2025.

What can be done?

Managing the risks of general AI is complex because the technology is evolving rapidly and has very broad applicability. There is also a diversity of opinions on the most promising paths to managing risks.

This section presents various ways to work with the problem of AI safety, with a career-focused perspective.

Technical AI safety examines building AI systems that reliably and robustly act in alignment with human values and objectives as a technical problem.

AI governance refers to the establishment of norms, policies, institutions, and frameworks designed to ensure AI systems are developed and used safely and ethically.

Communications and community building are aimed at disseminating useful and accurate information on AI risks and AI safety and growing the number of people aware of and working on the problem.

Supporting roles contribute to mitigating AI risks indirectly by, for example, enabling and supporting the work of researchers and policymakers.

Other work on neighbouring fields that also benefits AI safety.

Technical AI safety

Note that many of these approaches overlap with each other.

Alignment. Advanced AI systems may behave in unintended ways if they are not properly aligned with human values and intentions. Misaligned AI could pursue goals that conflict with what humans want, posing serious risks.

Iterative alignment: “[N]udging base models by optimising their output.” Approaches include Constitutional AI, Direct Preference Optimisation, Supervised Fine-Tuning, “Helpful, Harmless, Honest,” Reinforcement Learning with AI Feedback.

Reinforcement Learning from Human Feedback (RLHF): using human feedback to fine-tune a model’s behaviour. For example, OpenAI used RLHF to adapt GPT-3 into an instruction-following model that is far better at obeying user intents and produces much less toxic output.

However, this approach has been heavily criticised as insufficient within the AI safety community [the links from the shallow overview of technical … post] and it has so far not been successfully in preventing models exhibiting deceptive behaviour in research setups or solving fundamental theoretical issues surrounding alignment

Scalably learning from human feedback: Examples include iterated amplification, AI safety via debate, building AI assistants that are uncertain about our goals and learn them by interacting with us, and other ways to get AI systems to report truthfully what they know.

Threat modelling. Demonstrating the possibility of dangerous capabilities such as deception or manipulation in AI systems, and allowing their study.

“This approach splits into work that evaluates whether a model has dangerous capabilities (like the work of METR in evaluating GPT-4), and work that evaluates whether a model would cause harm in practice (like Anthropic’s research into the behaviour of large language models and this paper ). It can also include work to find ‘model organisms of misalignment’.

Robustness. AI should behave safely across a wide range of conditions and resist manipulation or adversarial attacks.

Environments and benchmarks testing robustness: “researchers could create more environments and benchmarks to stress-test systems, find their breaking points, and determine whether they will function appropriately in potential future scenarios”

Adversarial training and red-teaming: Developers deliberately stress-test models with adversarial inputs and retrain them on those failures. This improves a model’s resilience to manipulation attempts though even adversarially-trained systems can still be tricked by novel attacks.

Interpretability. If AI decision-making is not transparent and understandable to humans, this makes it hard to predict or trust an AI’s behavior. Today’s most capable models are often “black boxes” that even their creators can’t fully explain, which complicates human oversight. Research into interpretability aims to open up the AI “black box” – helping humans understand how a model makes decisions. Current interpretability tools are still limited and can sometimes give misleading insights, but making AI internals more transparent is widely seen as vital for long-term safety. Note that interpretability research has also been criticised from a safety perspective.

There is broad expert consensus that improving interpretability and scalable oversight of AI systems are high priorities for future research. Better methods to understand and monitor AI decision-making are viewed as key to aligning more powerful models with human intent.

Control: developing means to control powerful AI systems “even if the AIs are misaligned and intentionally try to subvert those safety measures”.

Career paths in technical safety research

Common career paths include research roles in academia or industry, such as becoming a research scientist/engineer on an AI safety team. Major AI labs and universities now have dedicated AI safety research groups, and there are also independent AI safety organizations and nonprofits.

Working for AI companies, especially leading ones, carries a significant risk of harm because it may contribute to advancing AI capabilities faster than AI safety.

For example, Reinforcement Learning with Human Feedback can be considered an AI safety technique, but it was also crucial in making possible ChatGPT and similar products that are now driving the advancing rate of progress in AI capabilities.

Working in AI companies may also contribute to harmful race dynamics between companies and between governments

AI governance

Rapid progress in AI is outpacing regulation: AI is improving so quickly (with unforeseen capabilities) that laws and oversight struggle to keep up.This gap means AI might be deployed before sufficient safeguards are in place, increasing the risk of unintended harm or misuse.

Geopolitical competition can increase risks. AI development is concentrated in a few countries (notably the US and China), which can fuel an AI arms race where nations race for AI advantages rather than collaborating on global safeguards.This complicates international governance efforts.

Prominent policy approaches to mitigate risks from advanced AI include:

Ensuring the equitable distribution of benefits from advanced AI. See for example here.

Responsible scaling policies. AI companies’ internal safeguards which are intended to become stricter as model capabilities evolve. See the approaches of Anthropic, Google DeepMind, and OpenAI.

Standards and evaluation. Standards and testing protocols to determine if advanced AI systems pose major risks. See the work of METR and the UK AI Safety Institute.

Safety cases. “[T]his practice involves requiring AI developers to provide comprehensive documentation demonstrating the safety and reliability of their systems before deployment. This approach is similar to safety cases used in other high-risk industries like aviation or nuclear power. You can see discussion of this idea in a paper from Clymer et al and in a post from Geoffrey Irving at the UK AI Safety Institute.”

Information security standards: establishing robust rules to protect “AI-related data, algorithms, and infrastructure from unauthorised access or manipulation.”

Liability law: incentivising companies to develop AI safely by clarifying how liability law applies to companies producing AI models with dangerous capabilities.

Compute governance. regulating access to computational resources required for training larger AI models.

International coordination. Fostering global cooperation in AI governance such as treaties, international organisations, or multilateral agreements to ensure consistent standards.

Societal adaptation. Preparing society for widespread integration of AI and the risks it poses, like ensuring that key societal decision-making isn’t handed over to AI systems.

Pausing AI scaling. There are past and current calls to pause the development of frontier AI models because of the dangers the technology poses.

Career paths in AI governance: AI governance is increasingly seen as a high-impact field – experts now rank AI policy work among the most impactful careers for reducing AI risks. Opportunities are growing fast, with a surge of new roles in governments, think tanks, and companies focusing on AI policy. This means principled people (including Christians) are needed to help steer AI’s trajectory. Opportunities include:

Government work. A role in an influential government can enable you to developing, enacting, and enforcing risk-mitigating AI policy. The US is likely to remain the dominant power in AI, but the UK government, the EU, and China may also present opportunities for impactful AI governance work.

Research on AI policy and strategy. Research into effective policies and the strategic needs of AI governance, public and expert opinions on AI, legal feasibility of policies, technical governance, and forecasting AI developments.

Industry work. Internal policy and corporate governance in large AI companies

Third-party auditing and evaluation. Governments often rely on third-party auditors to make sure regulations are followed, so there could be opportunities to do such work if regulation on the development of advanced AI systems is put in place.

International work and coordination. For example, working to improve US-China coordination on safe AI development.

International organizations also seek experts to work on global AI agreements and standards. AI governance careers span government, law, academia, industry, and non-profits.

Communication, community building and civic engagement

Community building work aims to get more people involved in the AI safety community and working on mitigating the risks from advanced AI.

Journalism. Meaningful democratic oversight of developing AI requires high-quality journalism about AI.

Civic engagement and advocacy Control AI advocate for various levels and measures of holding back or controlling AI development. Other organizations, like the Future of Life Institute, advocate more broadly for recognizing and addressing the risks associated with advanced AI.

Advocacy overlaps with AI governance, but the focus is more on public activism and advocacy than working within policymaking institutions.

Civic engagement includes using the normal channels of influencing politics and societal decision-making such as contacting your representative, voting, etc.

Protests. Pause AI, Stop AI conduct protests with the aim of stopping or pausing AI development.

Note that the protesting activities of Pause AI and Stop AI are somewhat controversial.

Supporting roles

Support roles in organisations working on technical AI safety or governance

Even in research organisations, around half of the people working there will be doing tasks other than research that are essential for the organisation. In addition to operations management, this includes things like research management, and executive assistant work.

All kinds of organisations need, depending on their size and structure, things like accounting, people management, general operations, communications, etc.

Donating to support AI safety work

Grantmaking to fund work on mitigating AI risks

Other

Career advisory 80,000 Hours identifies the following additional paths as also contributing to AI risk mitigation:

Working in information security to safeguard AI systems and critical experimental results against misuse, theft, or interference.

See their career review for information security here)

Specializing in AI hardware to influence the direction of AI development toward safer outcomes.

See their career review here

Organisations and resources

BlueDot Impact offers free AI safety courses and career support to help professionals shape safe AI.

Tarbell Fellowship is a one-year program for early-career journalists focusing on artificial intelligence coverage.

ERA fellowship supports early-career researchers and entrepreneurs in understanding and mitigating risks from frontier AI.

MATS (ML Alignment & Theory Scholars) Program connects talented scholars with mentors in AI alignment, interpretability, and governance.

The UK AI Security Institute is a directorate of the UK Department for Science, Innovation, and Technology, focusing on rigorous AI research to enable advanced AI governance.

The US AI Safety Institute aims to identify, measure, and mitigate the risks of advanced AI systems.

80,000 Hours provides research and advice on high-impact careers, especially those in AI safety.

They also list a large number of AI safety related job opportunities.

Cambridge AI Safety Hub offers programs like the AI Safety Fast Track and Mentorship for Alignment Research Students (MARS).

TechCongress AI Safety Fellowship paces technologists in Congress to inform policy on AI safety.

AIsafety.world presents a visual map of organisations related to AI safety work.

Interested in talking to someone about tackling AI risk with your career?

Sign up for 1-on-1 mentorship. We’ll pair you with a Christian who can talk to you about how to make an impact in AI safety and governance fields.

Notes

-

Google co-founder Larry Page has called AIs humanity’s rightful heirs and the next step of cosmic evolution, and find humans maintaining control over AIs ‘speciesist.’ (Source) “Jürgen Schmidhuber, an eminent AI scientist, argued that ‘In the long run, humans will not remain the crown of creation... But that’s okay because there is still beauty, grandeur, and greatness in realizing that you are a tiny part of a much grander scheme which is leading the universe from lower complexity towards higher complexity.’”(Source) Leading AI scientist Richard Sutton asked “why shouldn’t those who are the smartest become powerful?” while speaking about smarter-than-human AI. He has described the development of superintelligence as an achievement “beyond humanity, beyond life, beyond good and bad.” Sutton has also said that “succession to AI is inevitable,” and while AIs “could displace us from existence,” “we should not resist succession”.

-

Google’s Gemini 1.5 Pro analysed decompiled code files totaling over 280,000 tokens in approximately 30 to 40 seconds (one token is approximately equal to one word). See From Assistant to Analyst: The Power of Gemini 1.5 Pro for Malware Analysis

-

GPQA Diamond is a collection of hard multiple-choice questions in biology, physics, and chemistry written by people who have or are pursuing a PhD in the relevant field. The questions are purposefully designed to be hard for a non-expert to answer, even with unlimited internet access.

-

Some example questions:

CLASSICS https://agi.safe.ai/_next/static/media/classics.81111c73.png Here is a representation of a Roman inscription, originally found on a tombstone. Provide a translation for the Palmyrene script. A transliteration of the text is provided: RGYNᵓ BT ḤRY BR ᶜTᵓ ḤBL

ECOLOGY Hummingbirds within Apodiformes uniquely have a bilaterally paired oval bone, a sesamoid embedded in the caudolateral portion of the expanded, cruciate aponeurosis of insertion of m. depressor caudae. How many paired tendons are supported by this sesamoid bone? Answer with a number.

PHYSICS A block is placed on a horizontal rail, along which it can slide frictionlessly. It is attached to the end of a rigid, massless rod of length RR. A mass is attached at the other end. Both objects have weight WW. The system is initially stationary, with the mass directly above the block. The mass is given an infinitesimal push, parallel to the rail. Assume the system is designed so that the rod can rotate through a full 360360 degrees without interruption.

-

Page 55 in the PDF. The actual formatting of the question was whether Nick Bostrom, a well-known figure in the AI risk space, would affirm the existence of AGI by the year.

-

For example, the median predictions for the cost of the compute to run the largest AI experiment by 2030 were $100M/$180M, but the training of Google Gemini Ultra already cost an estimated $190M in 2024. The median predictions for the year when AI achieves gold metal performance in the International Mathematical Olympiad were 2035 (superforecasters) and 2030 (domain experts), but AI already performed on silver medal level in 2024, so it seems very likely gold metal performance will be achieved before 2030.

-

This range is a rough description of how much the risk is based on current realities vs how much it requires additional development and wider employment of AI. This necessarily contains a subjective element.

-

Proposed capabilities that could enable AIs to undermine human control

(source: The International AI Safety Report page 104)Proposed Capability Description Agent capabilities Act autonomously, develop and execute plans, delegate tasks, use various tools, achieve short-term and long-term goals across multiple domains. Deception Perform behaviours that systematically produce false beliefs in others. Scheming Identify ways to achieve goals that involve evading oversight, such as through deception. Theory of Mind Infer and predict people’s beliefs, motives, and reasoning. Situational awareness Access and apply information about itself, its modification processes, or deployment context. Persuasion Persuade people to take actions or hold beliefs. Autonomous replication and adaptation Create or maintain copies or variants of itself; adapt replication strategies to different circumstances. AI development Modify itself or develop other AI systems with enhanced capabilities. Offensive cyber capabilities Develop and apply cyberweapons or other offensive cyber capabilities. General R&D Conduct research and develop technologies across various domains. -

Some real-life examples of goal misspecification include:

-

In a well-known example, an AI was trained to play the video game CoastRunners. In the game, the player competes in a speedboat race against other boats to finish the track as fast as possible. The player earns point by hitting targets along the route. Instead of finishing the track, the AI learned to drive in a circle in a certain part of the track to repeatedly hit re-appearing targets to maximise its score. The AI had learned to pursue a high score instead of competing the race.

-

An AI was trained to use a robotic hand to grab a ball in a simulation, but instead it learned to place the hand in front of the ball so that it looked like it was grabbing the ball to humans giving feedback, even if it really wasn’t grabbing the ball.

-

-

Goal Misgeneralisation: Why Correct Specifications Aren’t Enough For Correct Goals

-

For example, an AI trained to find a gold coin in a video game CoinQuest instead learned to navigate to the location where the coin was in the training data. When the coin was moved to a different location in the level, the AI ignored the coin and still went to the spot where the coin used to be in the training run.

-

Defined as AI as the cause of death for 10% of people or more over a 5-year interval.

-

The predictions of domain experts and superforecasters are significantly different. The Existential Risk Persuasion Tournament report discusses this: “How should we interpret this persistent divide between groups? One possibility is that the experts are biased toward the topics they are professionally invested in and overweight the tail-risks they spend time thinking about—perhaps partly because those most worried about existential risk opt to dedicate their lives to studying it. Another is that the superforecasters are skilled at using historical data for relatively short-run forecasts but might struggle to adapt their methods to longer-run topics with less data—even when they have experts on hand to walk them through the topic. It is also possible that the epistemic strategies that were successful in earlier short-run forecasting tournaments, when the superforecasters attained their status, are not as appropriate at other points in time. For example, base rates may be more useful in periods of relative geopolitical calm and less useful in periods of greater conflict. All of these possibilities may be operating together, to various degrees.” Forecasting Existential Risks p. 20